Publications

Physiologically-inspired neural model for social interaction recognition from abstract and naturalistic videos

Neurophysiologically-inspired computational model of the visual recognition of social behavior and intent

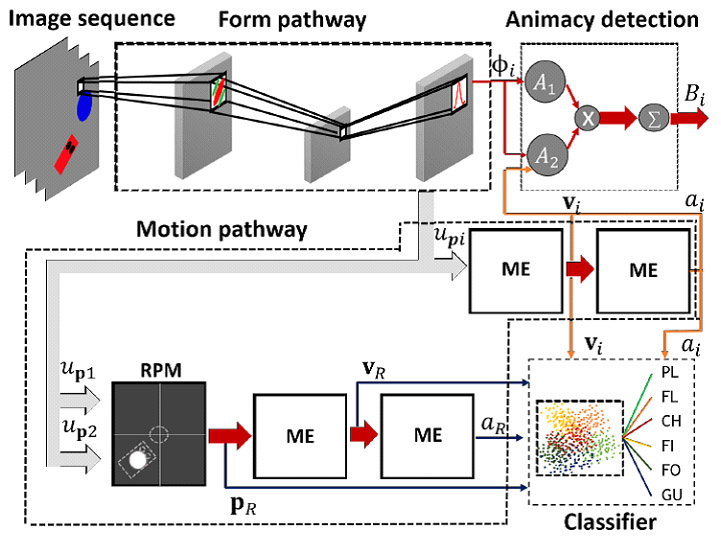

AIMS: Humans recognize social interactions and intentions from videos of moving abstract stimuli, including simple geometric figures (Heider & Simmel, 1944). The neural machinery supporting this kind of social interaction perception remains largely unclear. Here, we present a physiologically plausible neural model of social interaction recognition that identifies social interactions in videos of simple geometric figures and of fully articulated animal avatars moving in naturalistic environments.

METHODS: We generated the trajectories of both the geometric figures and the animal avatars using an algorithm based on a dynamical model of human navigation (Hovaidi-Ardestani et al., 2018; Warren, 2006). Our neural recognition model combines a deep neural network (VGG16) that realizes a shape-recognition pathway with a top-level neural network that integrates radial basis function (RBF) units, motion energy detectors, and dynamic neural fields. The model implements robust tracking of interacting agents based on interaction-specific visual features (relative position, speed, acceleration, and orientation).

RESULTS: A simple neural classifier, trained to predict social interaction categories from the features extracted by our neural recognition model, makes predictions that resemble those observed in previous psychophysical experiments on social interaction recognition from abstract (Salatiello et al., 2021) and naturalistic videos.

CONCLUSION: The model demonstrates that social interactions can be recognized by simple, physiologically plausible neural mechanisms, and it makes testable predictions about single-cell and population activity patterns in relevant brain areas.

Acknowledgments: ERC 2019-SyG-RELEVANCE-856495, HFSP RGP0036/2016, BMBF FKZ 01GQ1704, SSTeP-KiZ BMG: ZMWI1-2520DAT700, and NVIDIA Corporation.
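The interaction-specific features named in this abstract (relative position, speed, acceleration, and orientation) can be illustrated with a minimal sketch. It assumes that each agent has already been tracked and is summarized by a sequence of 2D positions sampled at a fixed time step. The function name `interaction_features` and the exact feature definitions are hypothetical illustrations written in NumPy, not the model's actual implementation, which extracts these quantities with neural mechanisms (RBF units, motion energy detectors, and dynamic neural fields).

```python
import numpy as np


def wrap(angle):
    """Wrap angles to the interval (-pi, pi]."""
    return np.angle(np.exp(1j * angle))


def interaction_features(p1, p2, dt):
    """Hypothetical helper: relative kinematic features of two tracked agents.

    p1, p2: arrays of shape (T, 2) with per-frame x, y positions (T >= 2).
    dt: time step between frames.
    Returns a dict of per-frame feature arrays.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)

    # Velocities and accelerations by finite differences along time
    v1 = np.gradient(p1, dt, axis=0)
    v2 = np.gradient(p2, dt, axis=0)
    a1 = np.gradient(v1, dt, axis=0)
    a2 = np.gradient(v2, dt, axis=0)

    # Relative position of agent 2 with respect to agent 1
    rel = p2 - p1

    # Headings from velocity directions (undefined for a stationary agent)
    heading1 = np.arctan2(v1[:, 1], v1[:, 0])
    heading2 = np.arctan2(v2[:, 1], v2[:, 0])
    # Direction in which agent 2 lies, as seen from agent 1
    bearing = np.arctan2(rel[:, 1], rel[:, 0])

    return {
        "rel_position": rel,
        "distance": np.linalg.norm(rel, axis=1),
        "rel_speed": np.linalg.norm(v2, axis=1) - np.linalg.norm(v1, axis=1),
        "rel_accel": np.linalg.norm(a2, axis=1) - np.linalg.norm(a1, axis=1),
        # Relative orientation of the two agents' headings
        "rel_heading": wrap(heading2 - heading1),
        # How far agent 1 is turned away from agent 2 (0 = facing it)
        "facing_angle": wrap(bearing - heading1),
    }


# Usage: two agents walking side by side, one unit apart
t = np.arange(10) * 0.1
feats = interaction_features(
    np.stack([t, np.zeros_like(t)], axis=1),
    np.stack([t, np.ones_like(t)], axis=1),
    dt=0.1,
)
```

Headings are derived from velocity vectors, so they are only meaningful while an agent moves (for a stationary agent `arctan2` simply returns 0); a fuller treatment would use the agents' body orientation where it is available.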

Physiologically-inspired neural model for social interactions recognition from abstract and naturalistic stimuli

Neurophysiologically-inspired model for social interactions recognition from abstract and naturalistic stimuli

A Dynamical Generative Model of Social Interactions

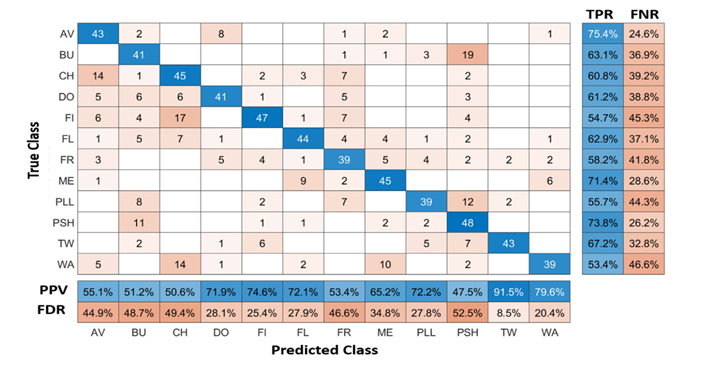

The ability to make accurate social inferences enables humans to navigate and act in their social environment effortlessly. Converging evidence shows that motion is one of the most informative cues shaping the perception of social interactions. However, the scarcity of parameterized generative models for producing highly controlled stimuli has slowed down both the identification of the most critical motion features and the understanding of the computational mechanisms that extract and process them from rich visual inputs. In this work, we introduce a novel generative model that automatically generates an arbitrarily large number of videos of socially interacting agents for comprehensive studies of social perception. The proposed framework, validated with three psychophysical experiments, can generate videos of up to 15 distinct interaction classes. The model builds on classical dynamical system models of biological navigation and generates visual stimuli that are parametrically controlled and representative of a heterogeneous set of social interaction classes. The proposed method thus represents an important tool for experiments aimed at unveiling the computational mechanisms mediating the perception of social interactions. Because it generates highly controlled stimuli, the model is valuable not only for behavioral and neuroimaging studies, but also for developing and validating neural models of social inference and machine vision systems for the automatic recognition of social interactions. In fact, contrasting human and model responses to a heterogeneous set of highly controlled stimuli can help identify critical computational steps in the processing of social interaction stimuli.
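To give a concrete feel for the class of "dynamical system models of biological navigation" this abstract builds on, here is a minimal sketch using one well-known model of this kind, the Fajen and Warren steering dynamics, keeping only its goal-approach term. The agent's heading behaves like a damped spring pulled toward the direction of a goal, with an attraction that grows as the goal gets closer: $\ddot\phi = -b\dot\phi - k_g(\phi - \psi_g)(e^{-c_1 d_g} + c_2)$, where $\phi$ is the heading, $\psi_g$ the goal direction, and $d_g$ the goal distance. Using a second, moving agent as the goal yields a simple following or chasing trajectory. The function `simulate_follow`, the constant walking speed, the Euler integration, and the parameter values are illustrative assumptions; this is not the published generator and does not reproduce its 15 interaction classes.

```python
import numpy as np


def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return np.angle(np.exp(1j * angle))


def simulate_follow(target_path, start, heading0, speed, dt,
                    b=3.25, k_g=7.5, c1=0.4, c2=0.4):
    """Hypothetical sketch: an agent steers toward a moving target.

    target_path: (T, 2) positions of the target agent, one row per time step.
    start: initial (x, y) of the steering agent; heading0: initial heading (rad).
    speed: constant walking speed (assumed); dt: integration time step.
    b, k_g, c1, c2: damping and goal-attraction parameters (illustrative values).
    Returns a (T, 2) array with the steering agent's positions.
    """
    target = np.asarray(target_path, dtype=float)
    out = np.empty_like(target)
    pos = np.array(start, dtype=float)
    phi, dphi = float(heading0), 0.0

    for t, goal in enumerate(target):
        out[t] = pos
        delta = goal - pos
        d_g = np.hypot(delta[0], delta[1])       # distance to the goal
        psi_g = np.arctan2(delta[1], delta[0])   # direction of the goal
        # Angular acceleration: damping plus goal attraction (stronger when close)
        ddphi = -b * dphi - k_g * wrap(phi - psi_g) * (np.exp(-c1 * d_g) + c2)
        # Semi-implicit Euler step for heading, then move along the new heading
        dphi += ddphi * dt
        phi += dphi * dt
        pos = pos + speed * dt * np.array([np.cos(phi), np.sin(phi)])
    return out


# Usage: a faster agent chases a target that walks along the x-axis
T, dt = 1000, 0.01
times = np.arange(T) * dt
target = np.stack([5.0 + 0.5 * times, np.zeros(T)], axis=1)
chaser = simulate_follow(target, start=(0.0, -3.0), heading0=0.0, speed=1.5, dt=dt)
distance = np.linalg.norm(target - chaser, axis=1)
```

Other interaction types could in principle be obtained by changing the goal (for example, steering away from the other agent for avoidance), but how the published model defines its classes is not described here.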