Description

In this project we develop highly controllable face stimuli for studying the neural basis of face processing and for analyzing the dynamics and structure of facial movements. For this purpose, we developed a computer graphics (CG) model of a monkey head based on MRI scans. The mesh is controlled by a ribbon-like muscle structure that is linked to control points driven by motion capture (MoCap).

We have shown that Gaussian Process Dynamical Models (GPDMs) are suitable for generating emotional motions because they can convey very subtle style changes for the intended emotion. We use GPDMs with different emotional styles, which allows us to generate interpolated stimuli with exact control of the emotional style of the expression.

Psychophysics

Our studies investigated the perceptual representations of dynamic human and monkey facial expressions in human observers, using photo-realistic human and monkey face avatars. The motion of the avatars was generated from motion capture data of both primate species, which were used to compute the corresponding deformation of the surface mesh of the face.

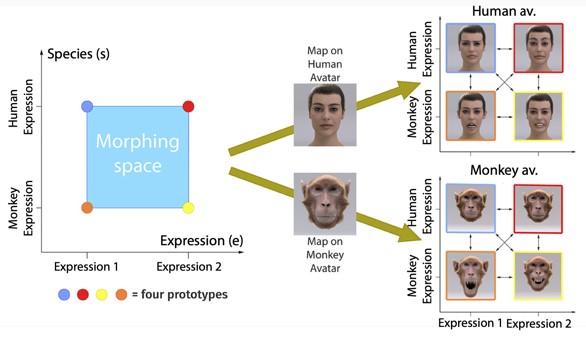

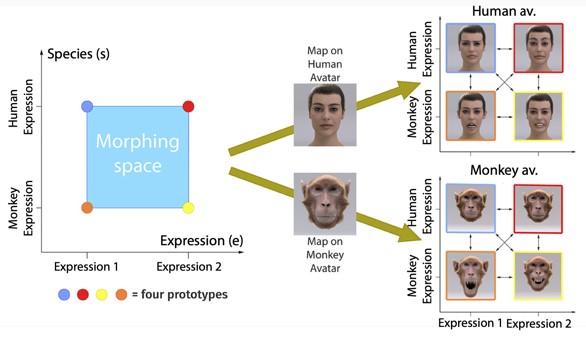

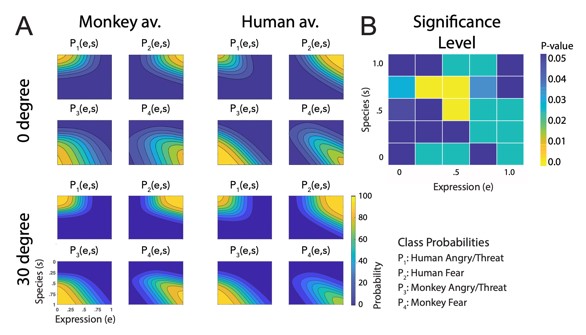

To realize full parametric control of motion style, we exploited the Bayesian motion morphing technique to create a continuous expression space that smoothly interpolates between human and monkey expressions. We used two human expressions and two monkey expressions as basic patterns, which represented corresponding emotional states (‘fear’ and ‘anger/threat’). Interpolating between these four prototypical motions in five equidistant steps, we generated a set of 25 facial movements that vary along two dimensions: the expression type and the species. Each generated motion pattern can be parameterized by a two-dimensional style vector (e, s), where the first component e specifies the expression type (e = 0: expression 1 (‘fear’); e = 1: expression 2 (‘anger/threat’)) and the second component s defines the species-specificity of the motion (s = 0: monkey; s = 1: human).
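The 5 × 5 style grid described above can be illustrated with a minimal sketch. It does not implement the Bayesian motion morphing technique itself. As a simplifying assumption, it blends the four prototypes with plain bilinear weights, and the prototype arrays are random placeholders rather than motion capture data. The names `style_grid`, `blend_weights`, and `morph` are hypothetical.

```python
import numpy as np

def style_grid(n_steps=5):
    """Return all (e, s) style vectors on an equidistant n_steps x n_steps grid."""
    levels = np.linspace(0.0, 1.0, n_steps)
    return [(float(e), float(s)) for e in levels for s in levels]

def blend_weights(e, s):
    """Bilinear weights of the four prototypes for style vector (e, s).

    e = 0: 'fear', e = 1: 'anger/threat'; s = 0: monkey, s = 1: human.
    Assumption: linear blending stands in for the actual morphing method.
    """
    return {
        ("monkey", "fear"): (1 - e) * (1 - s),
        ("monkey", "anger"): e * (1 - s),
        ("human", "fear"): (1 - e) * s,
        ("human", "anger"): e * s,
    }

def morph(e, s, prototypes):
    """Weighted sum of the prototype trajectories for style vector (e, s)."""
    weights = blend_weights(e, s)
    return sum(w * prototypes[key] for key, w in weights.items())

# Placeholder prototypes: (frames, features) arrays, NOT real MoCap data.
rng = np.random.default_rng(0)
prototypes = {key: rng.normal(size=(4, 6)) for key in blend_weights(0.0, 0.0)}

grid = style_grid()
stimuli = {style: morph(*style, prototypes) for style in grid}
print(len(stimuli))  # number of generated motion patterns
```

At the corners of the grid the blend reproduces one prototype exactly, and the weights always sum to one, so every intermediate pattern is a convex combination of the four basic patterns.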

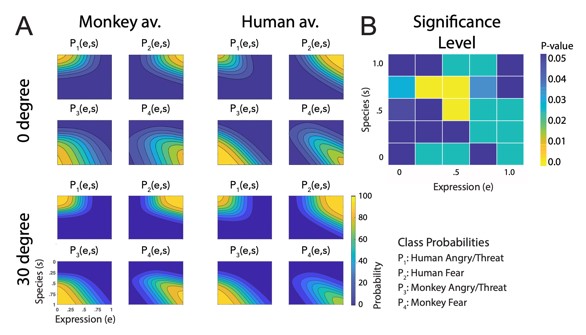

Our result implies that primate facial expressions are perceptually encoded largely independently of the head shape (human vs. monkey) and of the stimulus view. In particular, this implies that the encoding is substantially independent of the two-dimensional image features, which vary substantially between the view conditions, and even more between the human and the monkey avatar models.

Norm-referenced Encoding

We propose a mechanism for the recognition of dynamic expressions inspired by results on the norm-referenced encoding of face identity by cortical neurons in area IT. We have previously proposed a neural model that accounts for these electrophysiological results. We demonstrate here that the same principles can be extended to account for the recognition of dynamic facial expressions. The idea of norm-referenced encoding is to represent the shape of faces in terms of differences relative to a reference face, which we assume to be the shape of a neutral expression.
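The idea of encoding a face as a difference from a reference can be made concrete with a small sketch. The response function below is an assumption chosen for illustration, not necessarily the one used in the model: it multiplies the length of the difference vector by a direction-tuning term. The function name `norm_referenced_response` and the parameter `sharpness` are hypothetical, and the feature vectors are toy values.

```python
import numpy as np

def norm_referenced_response(shape, reference, preferred_direction, sharpness=1.0):
    """Toy response of a unit tuned to a direction relative to a reference face.

    Assumed form: |shape - reference| * ((cos(angle) + 1) / 2) ** sharpness,
    where angle is measured between the difference vector and the preferred
    direction. This is an illustrative choice, not the published model.
    """
    diff = np.asarray(shape, dtype=float) - np.asarray(reference, dtype=float)
    length = np.linalg.norm(diff)
    if length == 0.0:
        return 0.0  # the reference (neutral) face itself evokes no response
    unit_pref = preferred_direction / np.linalg.norm(preferred_direction)
    cos_angle = float(diff @ unit_pref) / length
    direction_tuning = ((cos_angle + 1.0) / 2.0) ** sharpness
    return float(length * direction_tuning)

# Toy feature vectors: a neutral reference and one expression prototype.
reference = np.zeros(3)
prototype = np.array([1.0, 2.0, 2.0])
preferred = prototype - reference

full = norm_referenced_response(prototype, reference, preferred)
half = norm_referenced_response(reference + 0.5 * preferred, reference, preferred)
opposite = norm_referenced_response(reference - preferred, reference, preferred)
print(full, half, opposite)
```

In this toy setting the response grows with the distance from the reference along the preferred direction and vanishes for the opposite direction, which is the qualitative signature of norm-referenced tuning.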

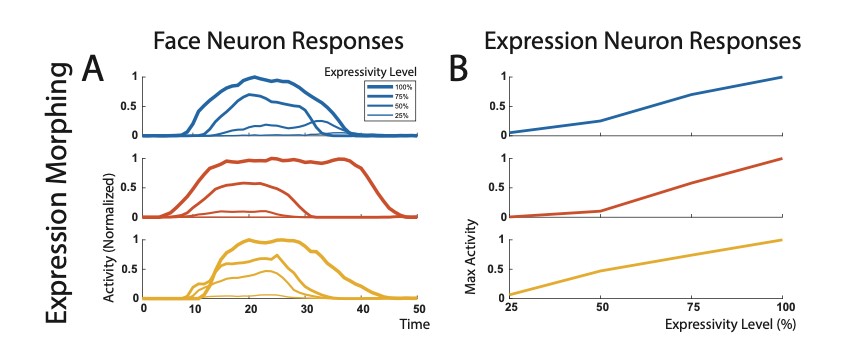

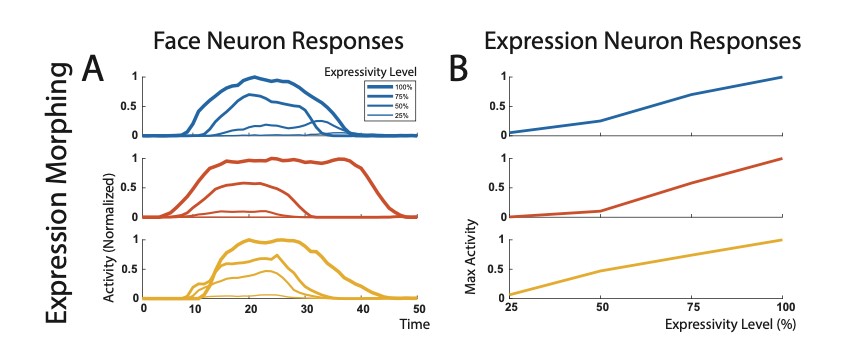

Interesting predictions emerge when the model is tested with stimuli of variable expression strength, which were generated by morphing between the prototypes and neutral facial expressions. Here, the Face neurons as well as the Expression neurons in the norm-based model show a gradual, almost linear variation of their activations with the expression level.
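A short derivation suggests why a roughly linear dependence is expected under idealized conditions. The symbols are assumptions introduced here for illustration: r is the feature vector of the neutral reference face, p that of an expression prototype, λ the expression level, and f a direction-tuning function. We further assume that morphing is linear in feature space and that the response is the product of the distance from the reference and the direction tuning.

```latex
\begin{align}
  \mathbf{u}(\lambda) &= \mathbf{r} + \lambda\,(\mathbf{p} - \mathbf{r}),
    \qquad 0 < \lambda \le 1, \\
  \nu(\lambda) &= \lVert \mathbf{u}(\lambda) - \mathbf{r} \rVert \;
    f\!\left( \frac{\mathbf{u}(\lambda) - \mathbf{r}}
                   {\lVert \mathbf{u}(\lambda) - \mathbf{r} \rVert} \right)
    = \lambda \, \lVert \mathbf{p} - \mathbf{r} \rVert \;
    f\!\left( \frac{\mathbf{p} - \mathbf{r}}
                   {\lVert \mathbf{p} - \mathbf{r} \rVert} \right).
\end{align}
```

Because the direction of the difference vector does not change with λ, the tuning term is constant and the response scales linearly with λ. Deviations from these idealized assumptions would be consistent with the "almost linear" behavior described above.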
