Perception of emotional body expressions depends on concurrent involvement in social interaction

Description:

Embodiment theories hypothesize that perceiving emotions from body movements activates brain structures that are also involved in motor execution during social interaction. This predicts that, for identical visual stimulation, bodily emotions should be perceived as more expressive when observers are themselves engaged in social motor behavior. We tested this hypothesis in virtual reality (VR), asking participants to judge the emotions of an avatar that reacted to their own motor behavior.

Based on motion capture data from four human actors, we learned generative models of body motion during emotional pair interactions. We use a framework based on Gaussian Process Latent Variable Models (GP-LVMs), which have been proposed as a powerful approach for modeling high-dimensional data through dimensionality reduction. We previously demonstrated that GP-LVMs can capture subtle changes in emotional style and convey this information to human observers in reconstructed motion, passing the Turing test of computer graphics (Taubert et al., 2012).
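For readers unfamiliar with GP-LVMs, the standard formulation can be summarized as below. This is a generic sketch, not necessarily the exact model used here: the page does not state the kernel or hyperparameters, so the RBF kernel and the symbols alpha, gamma, beta are assumptions. Mean-centered poses Y (N frames by D joint-angle dimensions) are explained by low-dimensional latent points X (N by q, with q much smaller than D).

```latex
% Standard GP-LVM log-likelihood (generic form; RBF kernel assumed)
% Y: N x D mean-centered pose matrix, X: N x q latent points, q << D
\log p(Y \mid X, \theta) = -\frac{ND}{2}\log(2\pi) - \frac{D}{2}\log\lvert K \rvert
  - \frac{1}{2}\operatorname{tr}\!\left(K^{-1} Y Y^{\top}\right),
\qquad
K_{ij} = \alpha \exp\!\left(-\frac{\gamma}{2}\lVert x_i - x_j \rVert^{2}\right) + \beta^{-1}\delta_{ij}
```

Maximizing this objective jointly over X and the hyperparameters theta = (alpha, gamma, beta) yields the low-dimensional latent space. Dynamical variants (GPDMs) additionally place a Gaussian process prior on how the latent trajectory evolves over time.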

Above: Trajectories generated by Style-GPDM in five interpolation steps between neutral and angry.
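The five interpolation steps can be illustrated with a minimal sketch. It assumes, hypothetically, that each emotional style is summarized by a style vector and that intermediate styles are obtained by linear blending; the actual Style-GPDM parametrization is not described on this page, and the function name `interpolate_styles` is illustrative only.

```python
"""Sketch: five-step style interpolation between neutral and angry.

Hypothetical assumption: each style is a vector, and intermediate styles are
linear blends. In a real Style-GPDM, the blended style would then condition
the learned mapping to poses; that step is omitted here.
"""
from typing import List, Sequence


def interpolate_styles(neutral: Sequence[float],
                       emotional: Sequence[float],
                       steps: int = 5) -> List[List[float]]:
    """Return `steps` style vectors evenly spaced from neutral to emotional."""
    if steps < 2:
        raise ValueError("need at least two steps to include both endpoints")
    if len(neutral) != len(emotional):
        raise ValueError("style vectors must have the same length")
    result = []
    for k in range(steps):
        w = k / (steps - 1)  # blend weight: 0.0, 0.25, 0.5, 0.75, 1.0 for steps=5
        result.append([(1 - w) * a + w * b for a, b in zip(neutral, emotional)])
    return result


if __name__ == "__main__":
    # Hypothetical 2-D style vectors, chosen only for illustration.
    for style in interpolate_styles([1.0, 0.0], [0.0, 1.0]):
        print(style)
```

Linear blending keeps both endpoints exact (step 1 is fully neutral, step 5 fully emotional), which matches the idea of a graded continuum of expressiveness.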

The participants' task was to tap the avatar on the shoulder from behind. After being tapped, the avatar turned around and reacted in one of two emotional styles (fearful or angry), presented at five interpolation steps. Participants rated the perceived emotion and later repeated the classification non-interactively, watching replays of the trajectories observed in previous trials. The experiment used a balanced design with two groups: one group started with the open-loop condition, the other with the closed-loop condition. It consisted of four blocks of 90 trials each (open loop with training blocks first), and each block contained a single emotional style (fearful or angry).
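The counterbalancing can be made concrete with a small schedule generator. This is a sketch under hypothetical assumptions not stated on this page: the four blocks are taken to be the 2 x 2 combinations of feedback condition ("closed_loop" for interaction, "open_loop" for replay) and emotion, each 90-trial block contains the five interpolation steps 18 times each (90 / 5), and training blocks are left out.

```python
"""Sketch: counterbalanced block schedule for the two participant groups.

Hypothetical assumptions: 4 blocks = 2 feedback conditions x 2 emotions,
90 trials per block = 5 interpolation steps x 18 repetitions, shuffled.
Training blocks are omitted.
"""
import random
from typing import Dict, List

EMOTIONS = ("fearful", "angry")
STEPS = (1, 2, 3, 4, 5)
TRIALS_PER_BLOCK = 90


def make_block(condition: str, emotion: str, rng: random.Random) -> Dict:
    """One block: every interpolation step repeated equally often, shuffled."""
    reps = TRIALS_PER_BLOCK // len(STEPS)  # 18 repetitions per step
    trials = [step for step in STEPS for _ in range(reps)]
    rng.shuffle(trials)
    return {"condition": condition, "emotion": emotion, "steps": trials}


def make_schedule(group: str, seed: int = 0) -> List[Dict]:
    """Group "A" starts with closed loop, group "B" with open loop."""
    if group not in ("A", "B"):
        raise ValueError("group must be 'A' or 'B'")
    rng = random.Random(seed)
    if group == "A":
        order = ("closed_loop", "open_loop")
    else:
        order = ("open_loop", "closed_loop")
    return [make_block(cond, emo, rng) for cond in order for emo in EMOTIONS]


if __name__ == "__main__":
    for block in make_schedule("B", seed=1):
        print(block["condition"], block["emotion"], len(block["steps"]))
```

Swapping the condition order between groups spreads any practice or fatigue effect evenly over both conditions, so the within-subject comparison of interaction versus observation is not confounded with block position.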

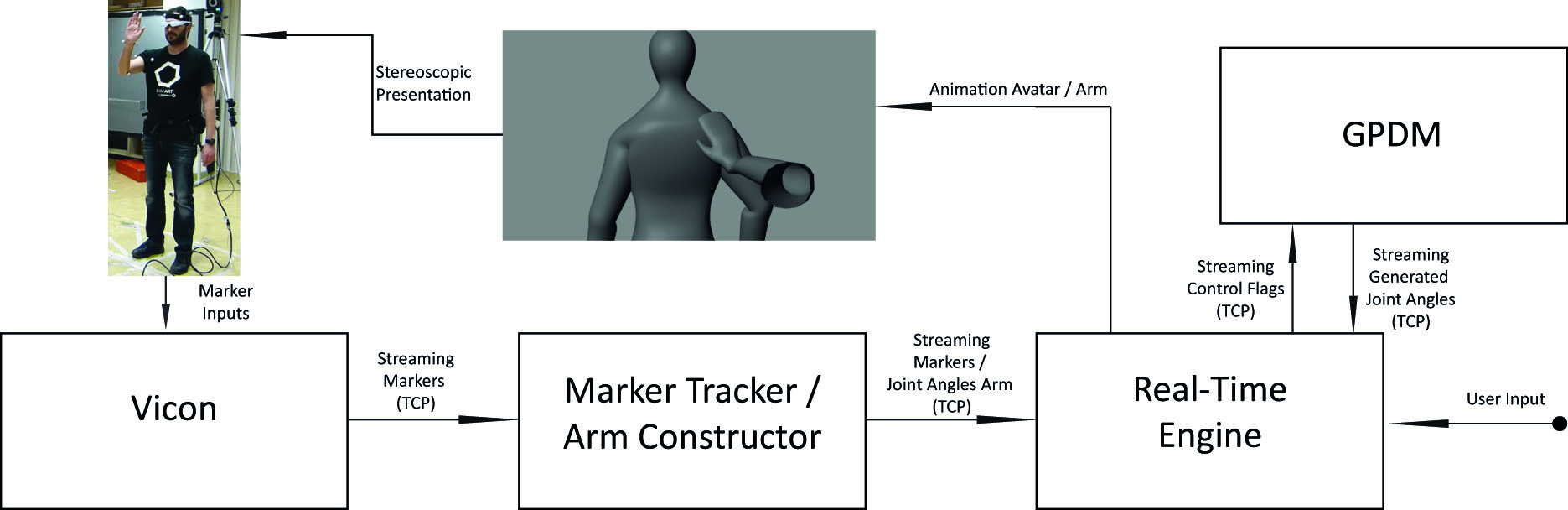

Above: the interactive system layout. From left to right: human marker positions are recorded with a Vicon system. A marker tracker infers the positions of occluded markers, and the human arm is reconstructed from the full marker set. A synchronization server streams the arm representation and the interactive response motion generated by the hierarchical GPDM to the Ogre 3D Real-Time Engine for rendering. The resulting movie is displayed on a head-mounted display worn by the acting human (far left). Below: the system in action.
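The step in which the marker tracker infers occluded markers can be illustrated with a deliberately simplified gap filler. The page does not describe the tracker's method; this sketch assumes, hypothetically, that each occluded marker lies on the same rigid segment as a visible reference marker and keeps its last observed offset from it (segment rotation is ignored). Production trackers typically fit a full rigid transform from three or more markers. The marker names and `fill_gaps` are illustrative only.

```python
"""Sketch: filling occluded markers with a last-known offset.

Hypothetical, translation-only simplification: an occluded marker is placed
at its reference marker's current position plus the offset observed the last
time both markers were visible. Rotation of the segment is ignored.
"""
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, ...]
Frame = Dict[str, Optional[Vec3]]  # marker name -> position, None if occluded


def fill_gaps(frames: List[Frame], reference: Dict[str, str]) -> List[Dict[str, Vec3]]:
    """Return frames with occluded markers estimated from their reference marker.

    `reference` maps a marker to another marker on the same segment. A marker
    that cannot be estimated yet (no visible reference or no stored offset)
    is left out of that frame.
    """
    last_offset: Dict[str, Vec3] = {}
    filled: List[Dict[str, Vec3]] = []
    for frame in frames:
        out: Dict[str, Vec3] = {}
        for name, pos in frame.items():
            ref_name = reference.get(name)
            ref_pos = frame.get(ref_name) if ref_name else None
            if pos is not None:
                out[name] = pos
                if ref_pos is not None:
                    # Remember the offset while both markers are visible.
                    last_offset[name] = tuple(p - r for p, r in zip(pos, ref_pos))
            elif ref_pos is not None and name in last_offset:
                # Occluded: reference position plus last observed offset.
                out[name] = tuple(r + o for r, o in zip(ref_pos, last_offset[name]))
        filled.append(out)
    return filled


if __name__ == "__main__":
    frames = [
        {"wrist": (0.0, 0.0, 1.0), "hand": (0.25, 0.0, 1.0)},
        {"wrist": (0.5, 0.0, 1.0), "hand": None},  # hand marker occluded
    ]
    print(fill_gaps(frames, {"hand": "wrist"})[1]["hand"])
```

In this usage example the hand marker is occluded in the second frame and is placed at the wrist position plus the stored offset (0.25, 0.0, 0.0).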

Emotional expressiveness of the stimuli was rated higher when participants initiated the avatar's emotional reaction in the VR setup through their own behavior than under pure observation (F(1,17) = 8.701, p < 0.01, N = 18). This effect was particularly pronounced for anger expressions.
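The reported degrees of freedom, F(1,17) with N = 18, are what a single two-level within-subject factor produces (df_error = N - 1). Assuming the effect was tested that way (the page does not give the exact model), the repeated-measures F equals the squared paired t statistic. The sketch below computes F both ways to show the equivalence; the ratings in the test are made up for illustration and are not study data.

```python
"""Sketch: F for a two-level within-subject factor (interaction vs. observation).

Assumption: one rating per participant and condition, and the effect is a
single within-subject factor, which matches df = (1, N - 1).
"""
import math
import statistics
from typing import Sequence, Tuple


def paired_f(interactive: Sequence[float], observed: Sequence[float]) -> Tuple[float, int, int]:
    """Return (F, df_effect, df_error) as the squared paired t statistic."""
    if len(interactive) != len(observed) or len(interactive) < 2:
        raise ValueError("need two equally long samples with at least 2 participants")
    diffs = [a - b for a, b in zip(interactive, observed)]
    n = len(diffs)
    # Paired t: mean difference over its standard error (assumes non-zero spread).
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t * t, 1, n - 1


def rm_anova_f(interactive: Sequence[float], observed: Sequence[float]) -> float:
    """Same F via repeated-measures ANOVA sums of squares (subjects x condition)."""
    n = len(interactive)
    grand = (sum(interactive) + sum(observed)) / (2 * n)
    cond_means = (statistics.mean(interactive), statistics.mean(observed))
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_err = 0.0
    for a, b in zip(interactive, observed):
        subj_mean = (a + b) / 2
        for y, m in ((a, cond_means[0]), (b, cond_means[1])):
            # Residual after removing subject and condition effects.
            ss_err += (y - subj_mean - m + grand) ** 2
    # df_effect = 2 - 1 = 1; df_error = (2 - 1) * (n - 1) = n - 1
    return ss_cond / (ss_err / (n - 1))
```

Under the same assumption, the reported F(1,17) = 8.701 corresponds to a paired t of about 2.95 (the square root of 8.701).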

Consistent with theories of embodied emotion perception, involvement in social motor tasks appears to increase the perceived expressiveness of bodily emotions. In future work, we will test this hypothesis with other emotions, such as happiness and sadness.