Interactive Emotional Avatars Model realized with Sparse Gaussian Process Dynamical Models

Description:

The GP-LVM can serve as a building block for hierarchical architectures [Lawrence & Moore, 2007], which makes it possible to model the conditional dependencies induced by the coordinated movements of multiple actors or agents in an interactive setting. In [TaubertEtAl2011KI], we learned the joint statistics of interactive handshake movements (represented as joint angles from motion capture of two actors) using a hierarchical GP-LVM approach. We validated the realism of the movements generated by the resulting statistical model in psychophysical experiments and found that the generated patterns are virtually indistinguishable from natural interactions.

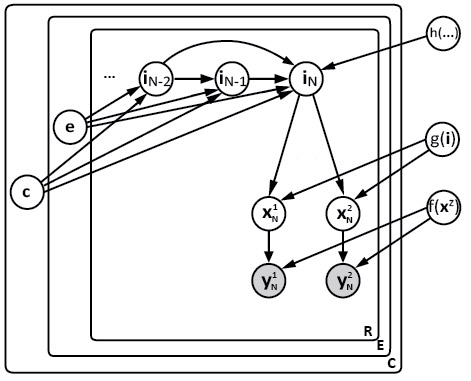

Left: graphical model representation of the interaction between a couple of avatars. A couple C consists of two actors (indexed 1 and 2), who performed R trials of handshakes with N time steps per trial and emotional style e. y: observed joint angles; x: their latent representations for each actor. The x are mapped onto the y via a shared function f(x), which has a Gaussian process prior. i: latent interaction representation, mapped onto the individual actors’ latent variables by a function g(i), which also has a Gaussian process prior. The dynamics of i are described by a second-order dynamical model with a mapping function h(i,c,e), which depends on the variables from the two preceding time steps. Right: trajectories in the latent spaces (top) and corresponding graphical output (bottom). Human observers rate these movies as very natural, i.e. they pass the Turing test of computer graphics. From [TaubertEtAl2012SAP].
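The generative structure described in the caption can be summarized in equations. The following is a sketch only: the time index n, the actor index a, the additive noise terms ε, the zero-mean priors and the kernel symbols k_f, k_g, k_h are notational assumptions introduced here and are not taken from the cited papers.

```latex
\begin{align}
  y^{(a)}_{n} &= f\big(x^{(a)}_{n}\big) + \varepsilon_{y}, & f &\sim \mathcal{GP}(0, k_f) \\
  x^{(a)}_{n} &= g\big(i_{n}\big) + \varepsilon_{x},       & g &\sim \mathcal{GP}(0, k_g) \\
  i_{n}       &= h\big(i_{n-1}, i_{n-2}, c, e\big) + \varepsilon_{i}, & h &\sim \mathcal{GP}(0, k_h)
\end{align}
```

Read from bottom to top, this is the top-down generative pass: the interaction variable i evolves under the second-order dynamics h, is mapped to each actor's latent variable x by g, and x is mapped to joint angles y by the shared function f.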

Moreover, we found almost no accuracy difference for emotional style recognition between human-generated and model-generated movements:

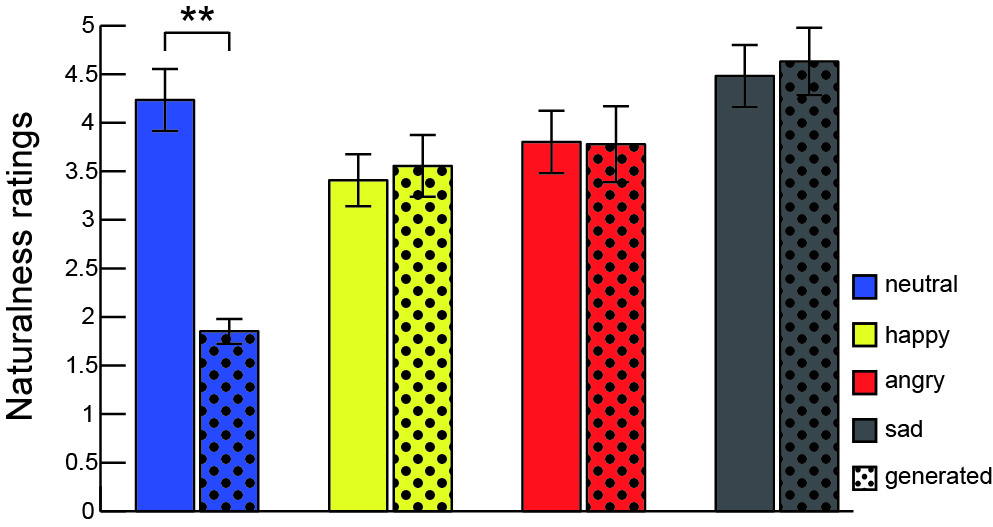

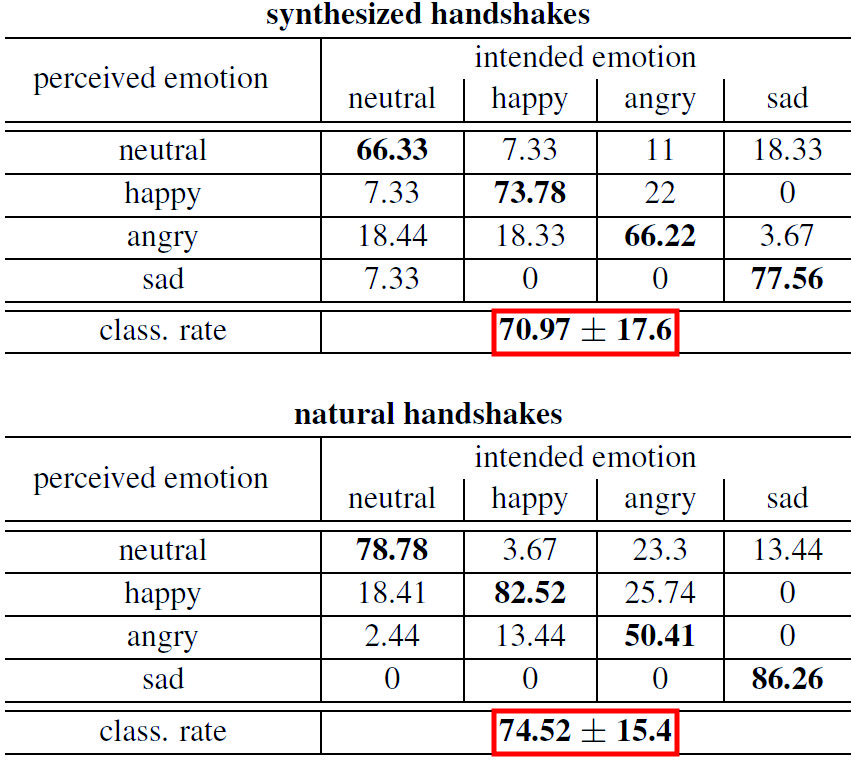

Left: mean ratings of naturalness for original (solid colored) and synthesized (dotted colored) handshake movements. On average, the naturalness ratings are comparable for synthesized and original movements. Participants were unable to distinguish original from synthesized movements (p ≤ 0.01, Wilcoxon rank-sum test), i.e. our animations pass the Turing test of computer graphics. Error bars show standard errors. Right: classification results. Emotion classification of synthesized (top) and natural (bottom) handshakes. Intended affect is shown in columns, percentages of subjects' (N=9) responses in rows. Bold entries on the diagonal mark rates of correct classification. class. rate: overall mean correct classification rate ± standard deviation across subjects for generated and natural movements. This standard deviation, a measure of the agreement between subjects, is similar for synthesized and natural handshakes. From [TaubertEtAl2012SAP].

In [TaubertEtAl2012SAP], we refined this approach to enable on-line synthesis of emotional movements. Many existing synthesis algorithms, e.g. those based on motion morphing or simple generative models, lack the capability to react on-line to the movements of an interaction partner. This is also difficult to achieve with offline synthesis approaches, e.g. motion capture with subsequent filtering or interpolation between stored trajectories [Bruderlin & Williams 1995]. Therefore, we proposed a new algorithm that represents fully stylized motion as part of a dynamical control system, based on a Gaussian process dynamical model (GPDM) [Wang et al. 2007]. GPDMs are essentially GP-LVMs with a nonlinear autoregressive model in the latent space. Instead of generating the movements of both avatars, we replace one of them with motion-capture data from the human interaction partner, and determine the best response of the other avatar via bottom-up Bayesian inference followed by a top-down generative pass through the GPDM.
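To illustrate what a nonlinear autoregressive model in the latent space means, here is a minimal NumPy sketch of second-order GP dynamics, where the next latent point is predicted from the two preceding ones. It is not the model from the cited papers: the observation mapping, the hierarchy, the dependence on couple and emotional style, and any sparse approximation are left out. The function names, the toy circular trajectory, and the fixed kernel hyperparameters (which would normally be learned) are all assumptions made for illustration.

```python
import numpy as np

def rbf_kernel(A, B, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel between the rows of A and the rows of B."""
    sq_dist = (np.sum(A**2, axis=1)[:, None]
               + np.sum(B**2, axis=1)[None, :]
               - 2.0 * A @ B.T)
    return variance * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / lengthscale**2)

def fit_second_order_dynamics(latent, noise=1e-4, lengthscale=1.0):
    """Precompute GP regression weights for the map (x[n-1], x[n-2]) -> x[n]."""
    inputs = np.hstack([latent[1:-1], latent[:-2]])  # each row: [x[n-1], x[n-2]]
    targets = latent[2:]                             # each row: x[n]
    K = rbf_kernel(inputs, inputs, lengthscale) + noise * np.eye(len(inputs))
    alpha = np.linalg.solve(K, targets)
    return inputs, alpha

def rollout(inputs, alpha, x_prev, x_prev2, steps, lengthscale=1.0):
    """Generate a latent trajectory by feeding the GP mean prediction back in."""
    trajectory = []
    for _ in range(steps):
        query = np.hstack([x_prev, x_prev2])[None, :]
        x_next = (rbf_kernel(query, inputs, lengthscale) @ alpha)[0]
        trajectory.append(x_next)
        x_prev2, x_prev = x_prev, x_next
    return np.array(trajectory)

# Toy 2-D latent trajectory (a circle), standing in for learned latent points.
t = np.linspace(0.0, 2.0 * np.pi, 60)
latent = np.column_stack([np.cos(t), np.sin(t)])

inputs, alpha = fit_second_order_dynamics(latent)
generated = rollout(inputs, alpha, x_prev=latent[1], x_prev2=latent[0], steps=20)
```

The rollout uses only the GP posterior mean; a fuller treatment would also propagate the predictive variance.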

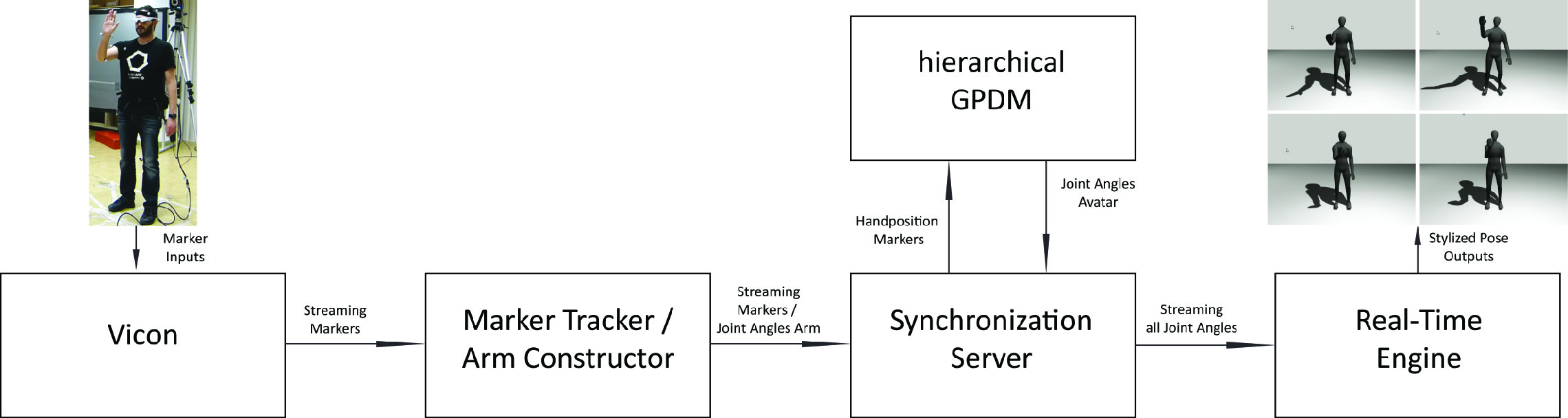

Our complete experimental system setup was presented in [TaubertSAP13]: we developed a pipeline from a Vicon motion capture system to a game engine. Head and arm marker positions of the human participant are identified in real time using Vicon Nexus software, which sends marker positions to the marker tracker module. I developed and implemented this module. It uses a Bayesian tracking model, similar to a Kalman filter, to infer the positions of occluded markers. The joint angles of the human actor's arm are computed from the complete marker set and sent to the synchronization server. The purpose of this server is to synchronize the data stream of the human participant with that of the motion synthesis model. One of the computationally most expensive parts of the model is the inference of the latent variables from the observed motion capture data of the human interaction partner. For real-time capability, we therefore implemented fast approximate inference of the latent variables via back-projection with a GP, an approach inspired by the Helmholtz Machine [Dayan et al., 1995]. Both the human and the avatar model are then sent on to the Ogre engine for rendering, which runs at 68 fps on average in our setup. The rendered scene is displayed on a head-mounted display worn by the human participant. Preliminary psychophysical tests indicate that the interactive motions thus generated are perceived as very natural.
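The idea behind inferring occluded marker positions with a Kalman-filter-like tracker can be sketched as follows. This is a textbook constant-velocity Kalman filter for a single marker coordinate, not the tracking model of the actual module. The function name, the noise parameters, and the convention that NaN marks an occluded sample are assumptions for illustration. During occlusion the update step is skipped, so the estimate is carried forward by the motion model alone.

```python
import numpy as np

def track_marker(measurements, dt=0.01, process_var=1.0, meas_var=1e-4):
    """Constant-velocity Kalman filter for one marker coordinate.

    NaN entries mark occluded samples; the first sample is assumed visible.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])        # state transition (position, velocity)
    H = np.array([[1.0, 0.0]])                   # only the position is observed
    Q = process_var * np.array([[dt**4 / 4, dt**3 / 2],
                                [dt**3 / 2, dt**2]])  # white-acceleration process noise
    R = np.array([[meas_var]])                   # measurement noise

    state = np.array([measurements[0], 0.0])
    cov = np.eye(2)
    estimates = []
    for z in measurements:
        # Predict step: always runs, also while the marker is occluded.
        state = F @ state
        cov = F @ cov @ F.T + Q
        # Update step: only when the marker is visible.
        if not np.isnan(z):
            innovation = z - H @ state
            S = H @ cov @ H.T + R
            gain = cov @ H.T @ np.linalg.inv(S)
            state = state + gain @ innovation
            cov = (np.eye(2) - gain @ H) @ cov
        estimates.append(state[0])
    return np.array(estimates)

# Hypothetical example: a marker moving at constant speed, occluded for 10 frames.
dt = 0.01
true_pos = 2.0 * np.arange(100) * dt
observed = true_pos.copy()
observed[60:70] = np.nan
estimates = track_marker(observed, dt=dt)
```

A real tracker would operate on 3-D positions of many markers and could exploit constraints between them; the scalar version only shows the predict-without-update mechanism.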

Above: the interactive system layout. From left to right: human marker positions are recorded with a Vicon system. A marker tracker infers the positions of occluded markers, and the human arm is constructed from the full marker set. A synchronization server streams the arm representation and the interactive response motion generated by the hierarchical GPDM to the Ogre 3D Real-Time Engine for rendering. The resulting movie is displayed on a head-mounted display worn by the acting human (far left). Below: the system in action. From [TaubertSAP13].