Highly realistic monkey body avatars with veridical motion

Description:

To study the neural and computational mechanisms of body and social perception, neurophysiological experimenters need highly controllable stimuli in which properties such as viewpoint, pose, or texture can be specified exactly. Such stimuli can be realized with computer graphics and animation. However, their realism is essential if one wants to study how the visual system processes real bodies. Stimulus generation therefore faces the challenging task of producing avatars with a highly realistic appearance and realistic body motion. Traditional approaches used in humans, such as marker-based motion capture, do not transfer easily to animals, so other solutions have to be developed. Available video-based markerless approaches either are not accurate enough for full 3D animation of body models or require prohibitive amounts of hand labelling of poses in individual frames. Combining recent advances in computer vision and convolutional neural networks (CNNs), we develop methods that achieve sufficiently accurate markerless tracking from very limited amounts of hand-labelled data by exploiting multi-camera video-based tracking. Based on this approach, we are able to generate highly realistic animations of monkeys, which our collaboration partners use to study body-selective neurons in electrophysiological experiments.

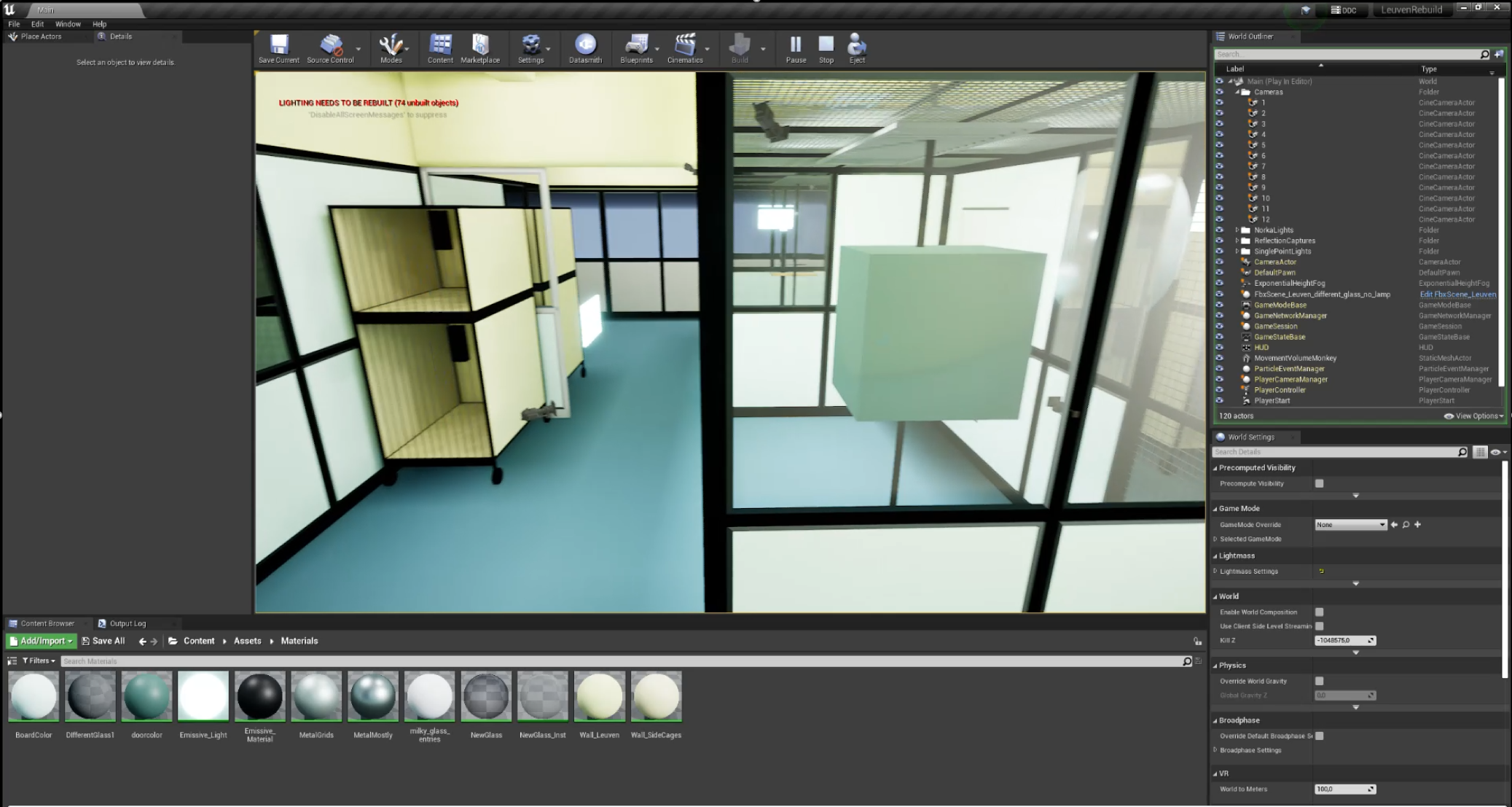

The animal cage was scanned with a 3D scanner, and the scene was reconstructed in the game development platform Unreal Engine. This allows exact modelling of the camera positions, so that the recording and camera setup can be optimized in simulation before the actual motion recordings in the real animal facility. From synchronized multi-camera recordings we track 3D positions using deep-learning approaches that rely on only a small number of hand-labelled multi-camera frames. After optimization, the generated trajectories are retargeted onto a commercial avatar that is embedded in the Unreal scene. The resulting dynamic scene is extremely similar to a real video and apparently cannot be distinguished from real scenes by monkey observers.
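To illustrate how 3D positions can be recovered from synchronized, calibrated cameras, the following is a minimal sketch of linear (DLT) triangulation of a single keypoint with NumPy. It is not the tracking method used in this project; it only shows the geometric step that turns per-camera 2D detections into one 3D point. The function names (`make_camera`, `project`, `triangulate_dlt`) and all camera parameters are hypothetical and chosen purely for illustration.

```python
import numpy as np


def make_camera(focal, center, R, t):
    """Build a 3x4 projection matrix P = K [R | t] (hypothetical pinhole camera)."""
    K = np.array([[focal, 0.0, center[0]],
                  [0.0, focal, center[1]],
                  [0.0, 0.0, 1.0]])
    return K @ np.hstack([R, np.reshape(t, (3, 1))])


def project(P, X):
    """Project a 3D point X into pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(projections, points_2d):
    """Triangulate one 3D point from two or more views (linear DLT).

    Each view contributes two linear constraints on the homogeneous point;
    the solution is the right singular vector with the smallest singular value.
    """
    rows = []
    for P, (u, v) in zip(projections, points_2d):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


# Two illustrative cameras: one at the origin, one rotated 30 degrees about y.
angle = np.deg2rad(30.0)
R2 = np.array([[np.cos(angle), 0.0, np.sin(angle)],
               [0.0, 1.0, 0.0],
               [-np.sin(angle), 0.0, np.cos(angle)]])
cam1 = make_camera(800.0, (320.0, 240.0), np.eye(3), np.zeros(3))
cam2 = make_camera(800.0, (320.0, 240.0), R2, np.array([-1.0, 0.0, 0.5]))

# A made-up keypoint (e.g. a joint), observed in both views and triangulated back.
keypoint = np.array([0.2, -0.1, 3.0])
observations = [project(cam1, keypoint), project(cam2, keypoint)]
estimate = triangulate_dlt([cam1, cam2], observations)
print(estimate)
```

With noisy detections from more than two cameras, the same linear system is simply overdetermined, and its least-squares solution is commonly refined afterwards by minimizing the reprojection error.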

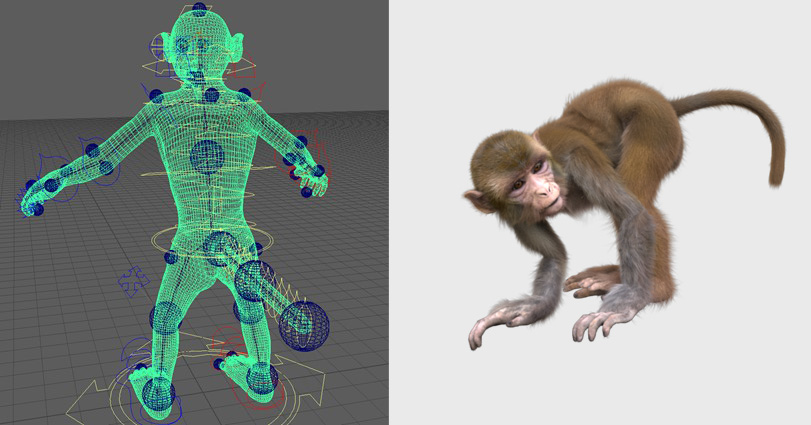

Commercial monkey avatar driven by markerless motion capture (left). Example of the rendered and posed macaque avatar (right).

The recording area as a game scene used to adjust lighting and camera positioning (created from 3D scans).

Specifically, we scanned the recording area with a 3D scanner and reconstructed the scenery in the game development platform Unreal Engine. In this way, we could plan the positioning of cameras and equipment before the actual recordings on site. This is especially useful because the recording environment can be very loud and crowded. We recorded videos of several subjects with a synchronized and calibrated multi-camera setup. From these data, we generated labels to train deep-learning-based tracking methods, customized for our problem, that produce action-specific motion trajectories. After optimization, these trajectories are retargeted onto a commercial avatar, a step that is usually done with commercial tools. However, we provide an easy way to use the generated labels to animate a virtual avatar. We can then render the avatar from arbitrary angles or change the surroundings. Using the digital copy of the recording area, we can also recreate the original videos synthetically and thus produce stimuli for neuroscience applications that are not only highly realistic but also controllable.
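As an illustration of rendering from arbitrary angles, the sketch below places virtual cameras on a circle around a target point and builds a world-to-camera (view) matrix for each. It assumes a generic right-handed, z-up convention with the camera looking along its negative z-axis; this is not Unreal Engine's API, and engine-specific axis conventions would need to be adapted. The names `look_at` and `orbit_positions` and all numeric values are hypothetical.

```python
import numpy as np


def look_at(eye, target, up=(0.0, 0.0, 1.0)):
    """Return a 4x4 world-to-camera matrix for a camera at `eye` facing `target`.

    Assumed convention: right-handed, camera looks along its -z axis.
    """
    eye = np.asarray(eye, dtype=float)
    target = np.asarray(target, dtype=float)
    forward = target - eye
    forward = forward / np.linalg.norm(forward)
    right = np.cross(forward, np.asarray(up, dtype=float))
    right = right / np.linalg.norm(right)
    true_up = np.cross(right, forward)

    rotation = np.stack([right, true_up, -forward])
    view = np.eye(4)
    view[:3, :3] = rotation
    view[:3, 3] = -rotation @ eye
    return view


def orbit_positions(target, radius, height, n_views):
    """Camera positions evenly spaced on a circle around `target`."""
    target = np.asarray(target, dtype=float)
    angles = np.linspace(0.0, 2.0 * np.pi, n_views, endpoint=False)
    return [target + np.array([radius * np.cos(a), radius * np.sin(a), height])
            for a in angles]


# Eight viewpoints around a made-up avatar position.
avatar_center = np.array([0.0, 0.0, 0.5])
eyes = orbit_positions(avatar_center, radius=2.0, height=1.0, n_views=8)
views = [look_at(eye, avatar_center) for eye in eyes]
```

Each matrix in `views` maps world coordinates into the frame of one virtual camera, so the same animated pose can be inspected from every viewpoint on the circle.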

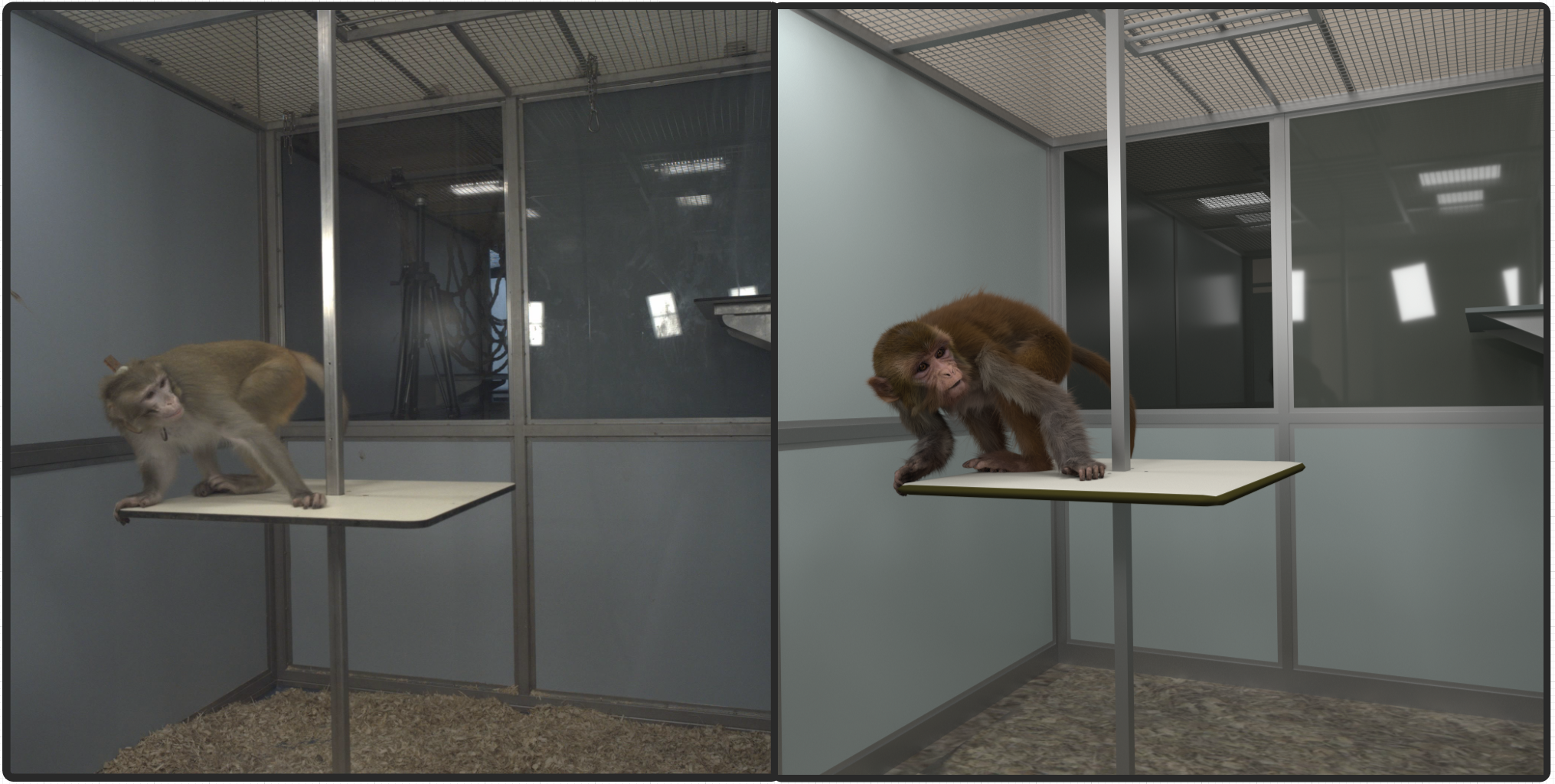

The pipeline generates highly realistic images. The recorded image (left) and the synthetically generated image (right).